Glossopticon VR visualises and sonifies over 1,500 languages of the Pacific region. Languages are mapped by location and number of speakers, and you can fly through a spatialised mix of recorded voices from many of the languages.

This page documents the project to give a sense of the experience. A WebVR version aimed at a research audience is available to view and interact with here.

Video

Images

Glossopticon VR on display at The Canberra Museum and Art Gallery as part of the UNESCO Memory of the World in Canberra exhibition, 2017

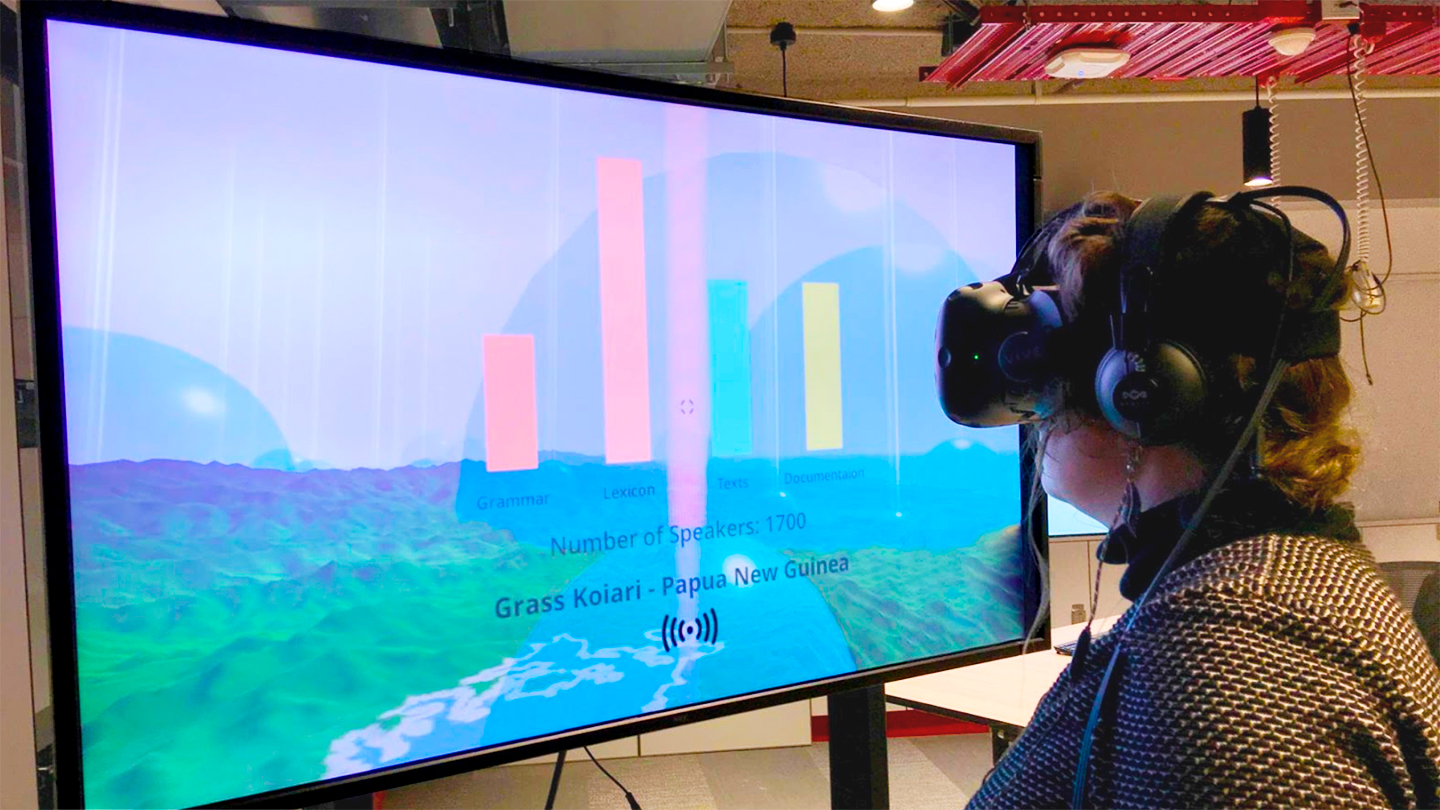

Glossopticon VR on display at University of Melbourne. Picture: Paul Burston

Further Details

Glossopticon is a VR-enabled exploration of the data held in the Pacific and Regional Archive for Digital Sources in Endangered Cultures (PARADISEC). PARADISEC is a digital archive containing audio, video, and text data on languages of the Pacific region.

A user can fly freely in VR over the entire Pacific region, surrounded by spatialised audio of the nearby languages. Each language appears as a beam of light surrounded by a semi-transparent dome that represents the number of speakers of that language. Further data on particular languages can be accessed through a head-up display, which can be turned on or off as an overlay on the viewer's field of view and also allows the viewer to isolate the individual voices of each language.
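To make the mapping described above concrete, here is a minimal Python sketch of one way speaker counts could drive dome size and listener distance could drive the audio mix. It is an illustration only, not the project's implementation: the `Language` record, the logarithmic radius scale, the linear gain falloff, and the 500 km hearing range are all assumptions introduced here, and the example coordinates are invented.

```python
import math
from dataclasses import dataclass


@dataclass
class Language:
    # Hypothetical record for illustration; not PARADISEC's actual schema.
    name: str
    lat: float      # latitude in degrees
    lon: float      # longitude in degrees
    speakers: int   # estimated number of speakers


def dome_radius(speakers: int, scale: float = 1.0) -> float:
    """Map a speaker count to a dome radius on a log scale (assumed mapping)."""
    return scale * math.log10(max(speakers, 0) + 1)


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    earth_radius_km = 6371.0
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def audio_gain(distance: float, max_distance: float = 500.0) -> float:
    """Linear falloff (assumed): 1.0 at the listener, 0.0 at max_distance or beyond."""
    return max(0.0, 1.0 - distance / max_distance)


def audible_mix(listener_lat, listener_lon, languages, max_distance=500.0):
    """Return (name, gain) pairs for languages within range, loudest first."""
    mix = []
    for lang in languages:
        d = distance_km(listener_lat, listener_lon, lang.lat, lang.lon)
        g = audio_gain(d, max_distance)
        if g > 0.0:
            mix.append((lang.name, g))
    return sorted(mix, key=lambda pair: pair[1], reverse=True)


# Invented example data: two placeholder languages, listener hovering over the first.
languages = [
    Language("Language A", -9.0, 147.0, 1000),
    Language("Language B", -17.0, 178.0, 50),
]
mix = audible_mix(-9.0, 147.0, languages)
```

A logarithmic radius is used in this sketch because speaker counts can span several orders of magnitude, so a linear scale would let the largest languages visually swamp the smallest ones. A real VR engine would typically handle the distance attenuation through its own spatial audio system rather than computing gains by hand.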

Via their interaction within the VR environment, audiences are exposed (often for the first time) to the sheer number of languages in the region, and to the level of endangerment of many of them.

Credits

Glossopticon VR is a project by Rachel Hendery (Western Sydney University) and Andrew Burrell (University of Technology Sydney). It is part of a larger Glossopticon VR and AR exhibition by Nick Thieberger (University of Melbourne), Rachel Hendery, and Andrew Burrell. The project was funded by the ARC Centre of Excellence for the Dynamics of Language (CoEDL) through a Transdisciplinary and Innovation Grant.

Rachel Hendery – project conception and linguistics.

Andrew Burrell – VR and data visualisation design and development.