ABOUT

Glossopticon VR visualises and sonifies over 1,500 languages of the Pacific region. Languages are mapped by location and number of speakers, and you can fly through a spatialised mix of the recorded voices of many of the languages.

This page provides access to a researcher-focused WebVR version of the project, along with documentation of the public exhibition version.

Glossopticon VR is a project by Andrew Burrell (virtual environment and data visualisation design/development, University of Technology Sydney), Rachel Hendery (conception and linguistics, Western Sydney University) and Nick Thieberger (linguistics and data, University of Melbourne).

The project was funded by the ARC Centre of Excellence for the Dynamics of Language (CoEDL) through a Transdisciplinary and Innovation Grant.

getting started

Clicking the 'Enter glossopticon VR' button will start the VR visualisation.

In the menu, choose the countries whose data you would like to view. On a mobile device, performance will be best if you select fewer countries at a time.

Selecting 'show all data points for selected locations' will display all language data; otherwise, only languages with associated audio will be shown.

When you are ready, click 'submit and build'.

Use the instructions button for a reminder of how to navigate and how to view the links you have collected.

This project is part of ongoing research and, as such, is in a slow but constant state of development and redevelopment. The primary intended audience for Glossopticon VR is other researchers.

navigating

The Glossopticon WebVR visualisation is currently best viewed either in desktop mode with a mouse and keyboard, or in mobile mode as a "magic window" (viewed on the device's screen without a headset, moving the device to look around).

desktop

Use the W, A, S and D keys to move and the mouse to look around. Holding [SHIFT] while pressing a movement key speeds up your movement, so you can cover long distances quickly.

Use the mouse cursor to point at and click on the language shards for more information, and use the icons to save links for later or to isolate audio.

mobile

Touch and hold the screen to move forward, and rotate the device to look around.

Use the central circle cursor to select language shards by hovering it over them, and use the same technique to select the icons to save links for later or to isolate audio.

You can view any links you have collected from the visualisation here.

Related Publications

Academic

Amanda Harris and Nick Thieberger. 2021. Digital curation and access to recordings of traditional cultural performance. UNESCO Intangible Cultural Heritage Courier of the Asia-Pacific.

Nick Thieberger. 2020. Technology in support of languages of the Pacific: neo-colonial or post-colonial? Asian-European Music Research Journal 5-3, pp. 17-24. https://doi.org/10.30819/aemr.

Andrew Burrell, Rachel Hendery and Nick Thieberger. 2019. Glossopticon: Visualising archival data. In Proceedings of the 2019 23rd International Conference in Information Visualization - Part II (IV-2 2019), 1 July 2019, pp. 100-103. IEEE.

Rachel Hendery and Andrew Burrell. 2019. Playful interfaces to the archive and the embodied experience of data. Journal of Documentation 76(2): 484-501. Emerald Publishing Limited.

Nick Thieberger. 2019. Access to recordings in the languages of the Pacific. Australia ICOMOS Historic Environment 31(3): 98-104. https://search.informit.org/doi/epdf/10.3316/informit.822776130009128

Linda Barwick and Nick Thieberger. 2018. Unlocking the archives. In Vera Ferreira and Nick Ostler (eds), Communities in Control: Learning tools and strategies for multilingual endangered language communities. Proceedings of the 2017 XXI FEL conference, pp. 135-139. Hungerford: FEL.

Tools/code

The source files for this project are available on GitHub.

https://github.com/clavis-magna/glossopticon-webvr

As this is an ongoing research project, the source code is provided as is. We welcome input and feedback via pull requests or issues on GitHub.

Exhibition Version

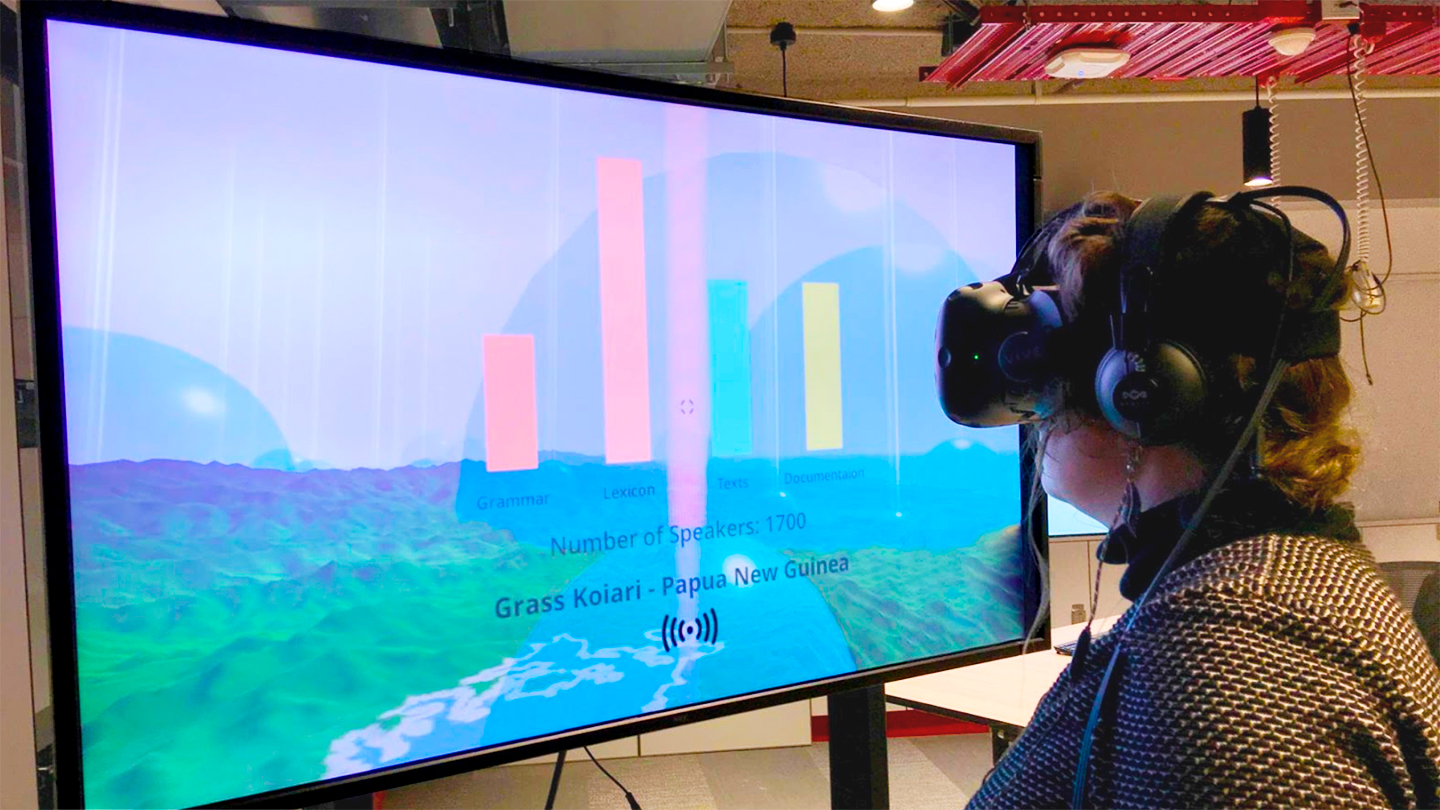

Glossopticon is a VR-enabled exploration of the data held in the Pacific and Regional Archive for Digital Sources in Endangered Cultures (PARADISEC). PARADISEC is a digital archive containing audio, video and text data for languages of the Pacific region.

A user can fly freely in VR over the entire Pacific region, surrounded by spatialised audio of the nearby languages. Each language appears as a beam of light surrounded by a semi-transparent dome representing the number of speakers of that language. Further data on a particular language can be accessed through a head-up display, which can be toggled on or off as an overlay on what the viewer sees and which also allows the viewer to isolate the individual voice of each language.

Through their interaction with the VR environment, audiences are exposed, often for the first time, to the sheer number of languages in the region and to the level of endangerment of many of them.

Video

Images

Glossopticon VR on display at The Canberra Museum and Art Gallery as part of the UNESCO Memory of the World in Canberra exhibition, 2017

Glossopticon VR on display at University of Melbourne. Picture: Paul Burston